Neural Engine, the increasingly powerful brain of Apple chips | iGeneration

We will probably have to wait for the presentation of the new iPhone range, which could take place on October 13, for Apple to reveal everything about the A14 Bionic. The manufacturer has given a first glimpse with the iPad Air 4, which includes the chip, but some unknowns remain, especially in terms of performance (fortunately, we can count on unofficial benchmarks).

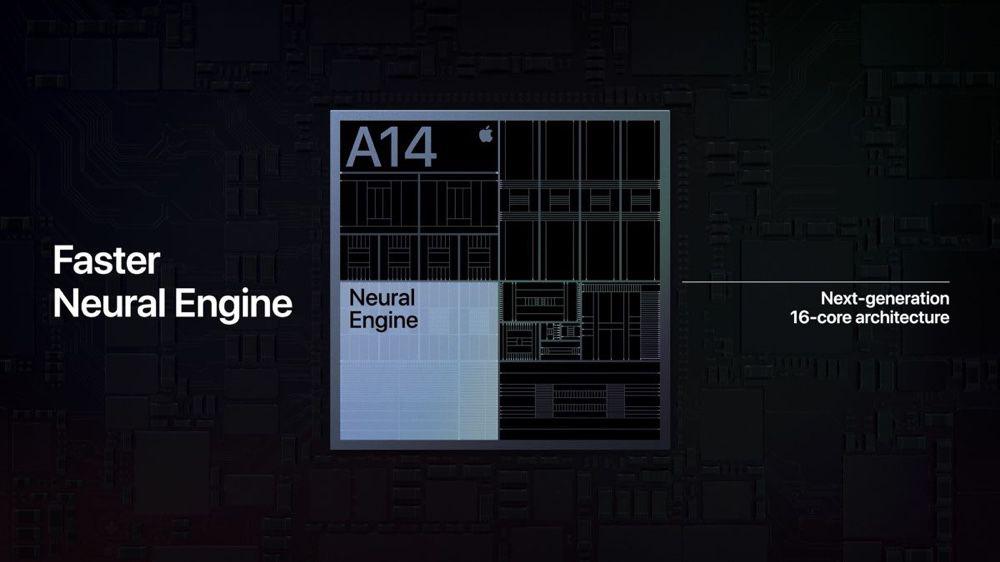

However, the manufacturer has provided some information on its latest system-on-chip (SoC), in particular on the Neural Engine. It includes 16 cores that can process a whopping 11,000 billion operations per second, compared with 600 billion for the neural chip integrated into the A11, the SoC of the iPhone 8 and X (whose "Bionic" nickname comes from the Neural Engine), and 5,000 billion for the A12 and the A13.
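To put these figures in perspective, here is a minimal sketch in Python that computes the ratios between generations. It uses only the throughput numbers quoted above; the dictionary and the `speedup` helper are names chosen for illustration, and the result says nothing about real-world performance.

```python
# Neural Engine throughput as quoted in the article, in operations per second.
# "A12/A13" groups the two chips because the article gives them the same figure.
NEURAL_ENGINE_OPS = {
    "A11": 600e9,         # 600 billion
    "A12/A13": 5_000e9,   # 5,000 billion (5 trillion)
    "A14": 11_000e9,      # 11,000 billion (11 trillion)
}


def speedup(newer: str, older: str) -> float:
    """Return the ratio of quoted throughput between two chips (hypothetical helper)."""
    return NEURAL_ENGINE_OPS[newer] / NEURAL_ENGINE_OPS[older]


print(f"A14 vs A11: {speedup('A14', 'A11'):.1f}x")          # prints "A14 vs A11: 18.3x"
print(f"A14 vs A12/A13: {speedup('A14', 'A12/A13'):.1f}x")  # prints "A14 vs A12/A13: 2.2x"
```

On paper, then, the A14's Neural Engine handles roughly 18 times as many operations per second as the A11's, and a little over twice as many as the A12's and the A13's.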

Accelerators are also built into the central processor to speed up computation. The A14 boasts a 10x speedup compared to the A12's CPU, which had two accelerators (just like the A13, which showed a 6x speedup compared to the A12). Machine learning tasks are processed twice as fast on the A14 as on the A12.

Apps that rely on the Core ML framework for some of their functions will have a field day. “It takes my breath away when I see what people can do with the A14 chip,” exclaims Tim Millet in the German magazine Stern. Apple's vice-president in charge of platform architecture explains that Apple is making every effort to put this technology in the hands of as many developers as possible.

Apple has invested heavily in making sure it doesn't just “package transistors” that won't be used. "We want everyone to be able to access it," says the executive. Of course, Apple didn't invent artificial neural networks, a technology that has been around for years. But Millet points out that until recently, two things were missing for machine learning techniques to give their best: "a lack of data and computing power to develop complex models that can also process a large amount of data."

It is thanks to the work of Johny Srouji's teams and the finer manufacturing process used for its chips that the A14 is so well suited to machine learning tasks, a claim that will obviously have to be verified in practice (read: The A11 Bionic chip in development since the iPhone 6).

Face ID is one of the functions that make heavy use of the Neural Engine, but as everyone has noticed, Apple's facial recognition system is helpless when you wear a mask. Tim Millet offers an excuse: "It's hard to see something you can't see," he says of the covered part of the face. There are options, such as recognizing only the upper part of the face, but "you will lose some of the specificities that make you unique," he explains. As a result, someone else could potentially unlock your iPhone more easily.

The problem is therefore “delicate”, the vice-president agrees. “Users want security, but they also want convenience,” and Apple is trying to make sure data is safe. The solution could ultimately be to combine Face ID with Touch ID, but for now it's one or the other, even though Apple continues to develop its fingerprint recognition technology (read: Integrating Touch ID into the iPad Air 4's power button wasn't easy).
